When NSX-T provides the networking and NSX ALB provides the load balancing in the vSphere IaaS Control Plane, the ALB Service Engines attach to each Tier-1 router (one per vSphere Namespace) with one vNIC. A Service Engine is limited to 10 vNICs, so we can connect up to 8 Tier-1 routers to one Service Engine, as one vNIC is needed for management and another for frontend traffic.

We were curious to see how NSX ALB would handle more than 8 vSphere Namespaces in this setup. My colleague Steven Schramm and I decided to test this in our lab.

Test Setup

Our lab environment was based on the following components:

- vCenter 8.0.3 (24022515)

- ESXi 8.0.3 (24022510)

- NSX ALB 22.1.5 (22.1.5-2p6)

- NSX-T 4.1.2.4

For testing, we created 9 vSphere Namespaces, each with one workload cluster using default settings and the default VM class best-effort-xsmall.

Observations

We observed the behavior of NSX ALB as we created the ninth vSphere Namespace and the corresponding Workload Cluster. As expected, NSX ALB started to deploy a new Service Engine to accommodate the additional namespace.

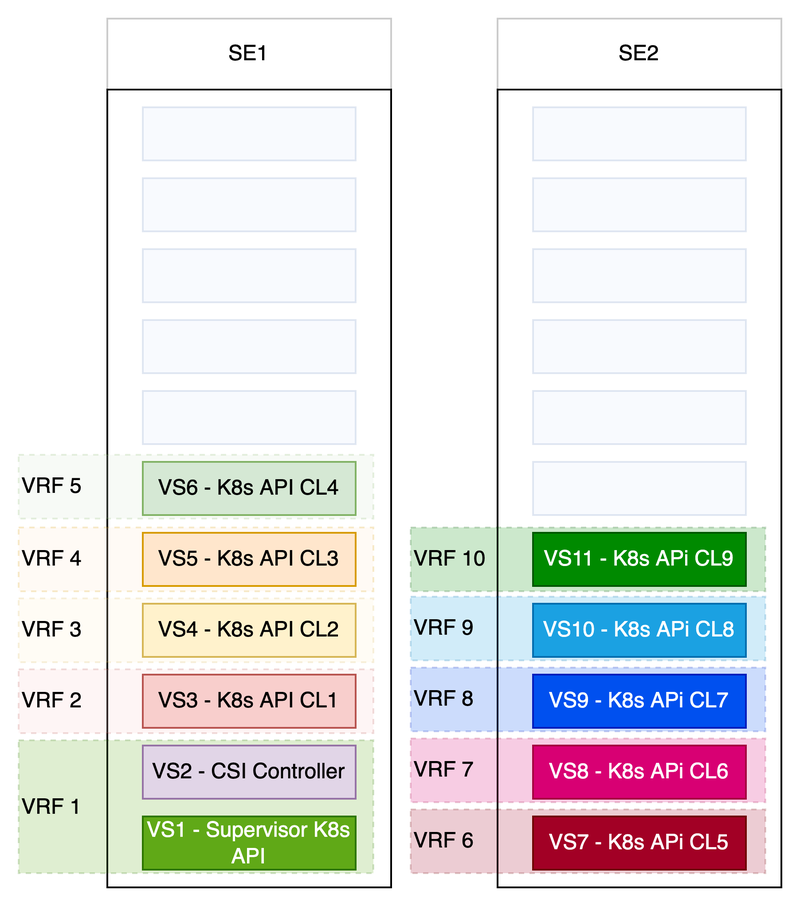

To visualize this procedure, we created two drawings. The first drawing shows the number of Service Engines and the distribution of the running virtual services with nine vSphere Namespaces and one K8s cluster per vSphere Namespace. The Service Engine Group configuration allows each Service Engine to run up to 11 virtual services. The drawing also shows eleven virtual services: one for the K8s API of the Supervisor cluster, one for the CSI controller, and nine for the running K8s clusters. The virtual services for the Supervisor K8s API and the CSI controller run in the same VRF, while the K8s clusters run in dedicated VRFs, one VRF per vSphere Namespace.

As you can see, two Service Engines are deployed. The Service Engine Group runs in HA mode N+M, so one additional Service Engine is required to cover the outage of one Service Engine and still have enough capacity to maintain all running virtual services.

With a maximum of 11 virtual services per Service Engine, two Service Engines are enough to keep all virtual services running even if one of them fails. That no longer holds once you account for the 10 VRFs and the requirement of one vNIC per VRF. Still, only two Service Engines are deployed, because the N+M formula does not take the number of used vNICs into consideration.

What does this mean in case of an SE failure? After one of the two SEs fails, the Controller tries to reschedule the virtual services but cannot connect all ten VRFs (ten vNICs) to the remaining SE, since one of its vNICs is already used for the management connection. Because of this missing vNIC capacity, a new SE has to be created, and one of the virtual services is out of service until that SE is initialized. This can be prevented by increasing the number of SEs deployed as a buffer or by reducing the number of virtual services allowed per SE, but either change also increases the number of licenses used and the corresponding costs.
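The arithmetic behind this can be written down as a small sketch. It is only a simplified model of the numbers from our lab, not the Controller's actual placement logic. The function names and the assumption that only the management vNIC is reserved are ours.

```python
import math

# Values taken from the lab described above.
VIRTUAL_SERVICES = 11  # Supervisor K8s API + CSI controller + 9 K8s clusters
MAX_VS_PER_SE = 11     # limit configured in the Service Engine Group
VRFS = 10              # 1 shared VRF + 9 namespace VRFs, one vNIC each
VNICS_PER_SE = 10      # vNIC limit per Service Engine
RESERVED_VNICS = 1     # assumption: only the management vNIC is reserved
BUFFER_SES = 1         # the "M" in N+M


def ses_by_virtual_services(vs_count: int, max_vs_per_se: int) -> int:
    """SEs needed when only the virtual service ratio is considered (the 'N')."""
    return math.ceil(vs_count / max_vs_per_se)


def ses_by_vnics(vrfs: int, vnics_per_se: int, reserved: int) -> int:
    """SEs needed so that every VRF can get its own vNIC."""
    usable = vnics_per_se - reserved
    return math.ceil(vrfs / usable)


n = ses_by_virtual_services(VIRTUAL_SERVICES, MAX_VS_PER_SE)
deployed = n + BUFFER_SES
needed_for_vnics = ses_by_vnics(VRFS, VNICS_PER_SE, RESERVED_VNICS)
survivors = deployed - 1  # one SE fails

print(f"SEs deployed by N+M:       {deployed}")
print(f"SEs needed for the vNICs:  {needed_for_vnics}")
print(f"Failure absorbed in place: {survivors >= needed_for_vnics}")
```

Reserving two vNICs instead of one (management plus frontend) leaves 8 usable vNICs and gives the same result: two SEs are needed for ten VRFs, so a single surviving SE cannot take over everything.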

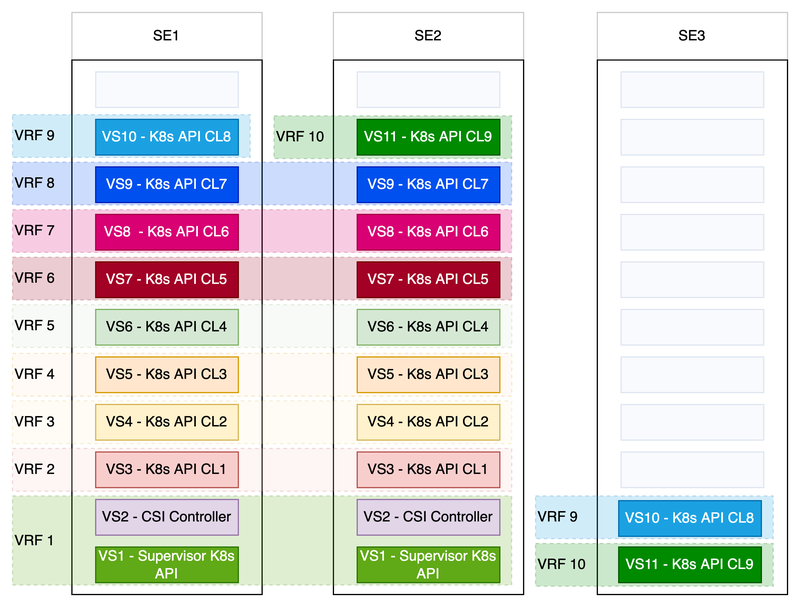

The second drawing shows the effect of further scaling. In this example we increased the minimum scale per virtual service from one to two, so each virtual service has to run on at least two different SEs. This also increases the number of required Service Engines from two to three, but the vNIC capacity problem remains.
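A rough way to reproduce these numbers is shown below. This is a sketch under our own assumptions: we model N as the number of virtual service placements divided by the per-SE limit, and `deployed_ses` and `min_vrfs_on_busiest_se` are hypothetical helpers, not NSX ALB functions.

```python
import math


def deployed_ses(vs_count: int, min_scale: int, max_vs_per_se: int, buffer_ses: int) -> int:
    """Assumed model: N covers all placements, M is added on top."""
    placements = vs_count * min_scale
    by_capacity = math.ceil(placements / max_vs_per_se)
    # A virtual service with scale k has to sit on k different SEs.
    n = max(by_capacity, min_scale)
    return n + buffer_ses


def min_vrfs_on_busiest_se(vrfs: int, min_scale: int, se_count: int) -> int:
    """Lower bound on the data vNICs the busiest SE needs (pigeonhole)."""
    return math.ceil(vrfs * min_scale / se_count)


before = deployed_ses(11, 1, 11, 1)
after = deployed_ses(11, 2, 11, 1)
print(f"SEs with minimum scale 1: {before}")
print(f"SEs with minimum scale 2: {after}")

# After one of the three SEs fails, two SEs have to carry every VRF twice.
vnics_needed = min_vrfs_on_busiest_se(10, 2, after - 1)
usable_vnics = 10 - 1  # assumption: only the management vNIC is reserved
print(f"vNICs needed on a surviving SE: {vnics_needed}, usable: {usable_vnics}")
```

With two surviving SEs and a minimum scale of two, each survivor would have to attach to all ten VRFs, which again exceeds the usable vNICs.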

Conclusion

This test indicates that the number of vSphere Namespaces is not limited by the number of vNICs available per SE, but in case of an SE failure the recovery time can be much longer. This can be addressed by, for example, changing the values that control how the number of SEs scales in N+M HA mode, but doing so may increase the number of licenses you need to keep your workloads running and to reduce the impact of an SE failure.